May 31, 2017 | Data Privacy, Freedom of information, Internet regulation, Journalism, News media, Privacy

With the General Election fast approaching, we have collected a few blog posts from around the web that consider what the party manifestos say about information law and policy. The ICLR, General Election 2017: what the party manifestos say about law and...

May 31, 2017 | Freedom of information, Government policy, News media

In response to a Cabinet Office request in 2015, the Law Commission has been reviewing relevant statutes – the Official Secrets Act in particular – to “examine the effectiveness of the criminal law provisions that protect Government information from unauthorised...

May 30, 2017 | Access to information, Access to justice, Freedom of information, ILPC Research and Publications, News media, Privacy

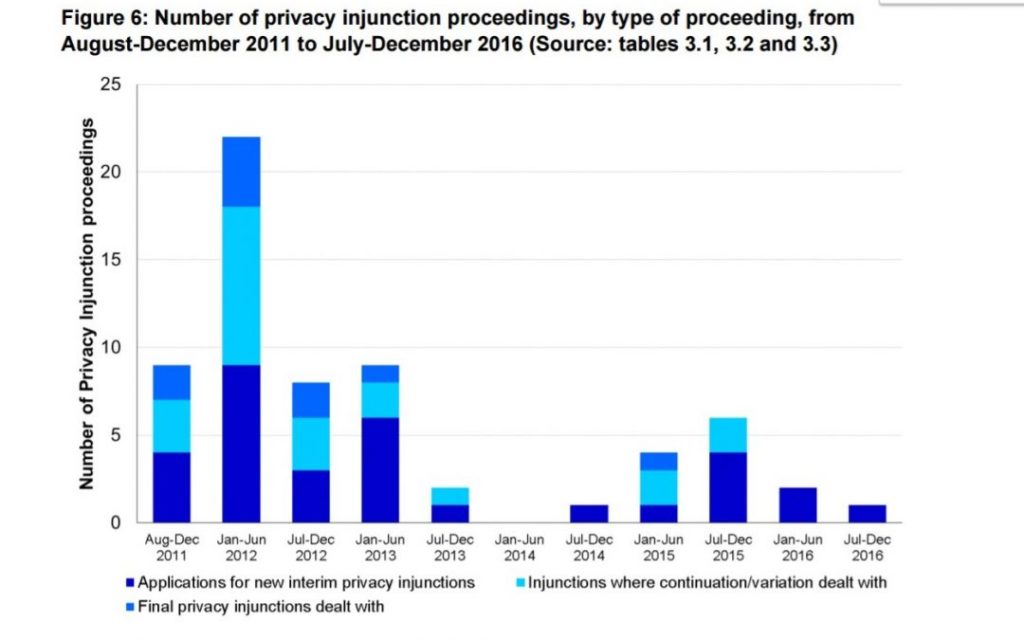

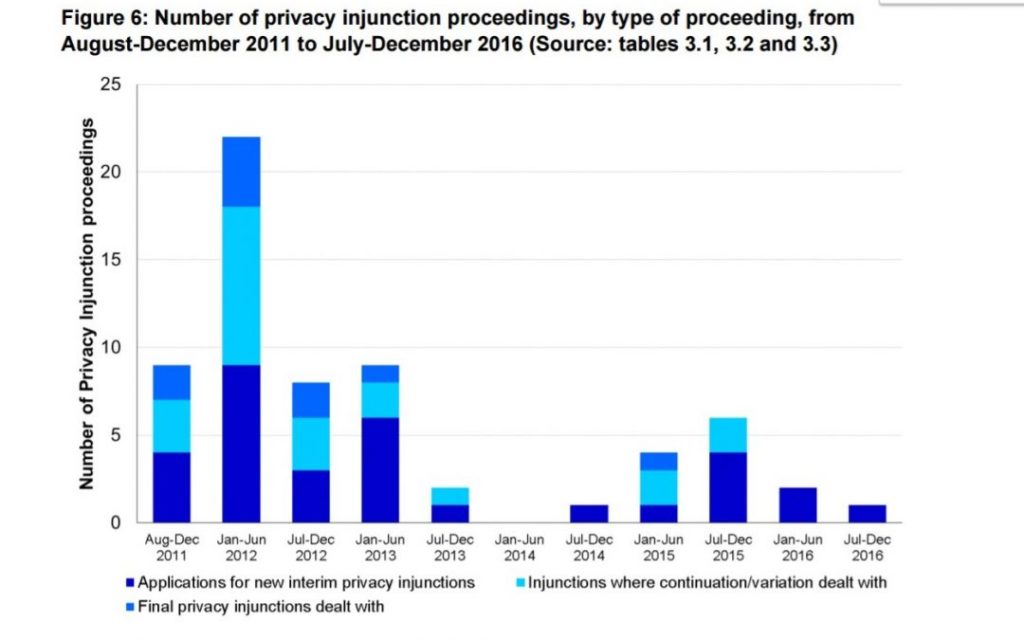

Dr Judith Townend highlights the difficulties in accessing accurate data on privacy injunctions in a submission made on behalf of the Transparency Project to the Queen’s Bench ‘Media List’ consultation. Dr Townend is a Lecturer in Media and Information Law,...

May 24, 2017 | Government policy, Privacy

This event took place at the Information Law and Policy Centre at the Institute of Advanced Legal Studies on Monday, 5 June 2017.
Date: 5 June 2017
Time: 18:00 to 20:00
Venue: Institute of Advanced Legal Studies, 17 Russell Square, London WC1B 5DR
Book: Online on the...

May 24, 2017 | Government policy, Surveillance

This event took place at the Information Law and Policy Centre at the Institute of Advanced Legal Studies on Monday, 26 June 2017.
Date: 26 June 2017
Time: 17:00 to 19:00
Venue: Institute of Advanced Legal Studies, 17 Russell Square, London WC1B 5DR
Book: Online on...